
5 Critical Steps For Effective Data Cleaning


Data cleaning is an essential first step in building a data analytics strategy. Knowing how to clean your data can save you countless hours and keep you from making serious mistakes, such as basing your analysis on the wrong data or, worse, drawing the wrong conclusions. Here are the 5 critical steps for effective data cleaning.

Data is power. It’s one of the most precious resources we have, but many people don’t know how to use it properly. The ability to collect and process information is now widely available. However, in the race to create more ‘big data’, we mustn’t lose sight of the fact that raw data means little on its own. To make use of data, we must first analyze it and then act on what we find.

Data cleaning is the first step of any data analysis work, and it is often said to take up as much as 80% of an analyst’s time. If you are not careful to select and prepare the right data, you can waste that time and end up with serious mistakes and false conclusions.

Data cleaning: Introduction

Data cleaning is the process of preparing data, manually or automatically, to improve its quality and make it suitable for analysis. It involves identifying and handling invalid, incomplete, or inconsistent data, and it is a necessary step in any data analysis project. Alteryx is a popular data analytics and data science tool today, so Alteryx training and certification from a reputable institute can be a valuable asset.

There are many different approaches to data cleaning. The most important thing is to be systematic and consistent in your approach. Here are some best practices for data cleaning:

Identify the source of your data: This will help you determine what kind of cleaning is needed.

Document everything: Keep track of the steps you take to clean your data. This makes it easier for you and others to review and reproduce your work, and it helps if you need to go back and make changes later.

Be consistent: Use the same method to handle missing values, outliers, etc., throughout your dataset.
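To make the "document everything" and "be consistent" advice concrete, here is a minimal Python sketch, assuming your records are plain dictionaries. The function names (`strip_whitespace`, `drop_rows_missing_email`, `run_pipeline`) are hypothetical: each cleaning rule is written once as a function and applied to the whole dataset, and the pipeline records how many rows each step kept.

```python
from typing import Callable

Row = dict[str, object]
Step = Callable[[list[Row]], list[Row]]


def strip_whitespace(rows: list[Row]) -> list[Row]:
    """Trim surrounding whitespace from every string field."""
    return [{k: v.strip() if isinstance(v, str) else v for k, v in r.items()} for r in rows]


def drop_rows_missing_email(rows: list[Row]) -> list[Row]:
    """One rule, applied everywhere: rows without an email are dropped."""
    return [r for r in rows if r.get("email")]


def run_pipeline(rows: list[Row], steps: list[Step]) -> tuple[list[Row], list[str]]:
    """Apply each step in order and log what it did to the row count."""
    log: list[str] = []
    for step in steps:
        before = len(rows)
        rows = step(rows)
        log.append(f"{step.__name__}: {before} -> {len(rows)} rows")
    return rows, log


# Hypothetical raw records.
raw = [
    {"name": " Ana ", "email": "ana@example.com"},
    {"name": "Ben", "email": "   "},
    {"name": "Cy", "email": None},
]
cleaned, log = run_pipeline(raw, [strip_whitespace, drop_rows_missing_email])
print(cleaned)  # [{'name': 'Ana', 'email': 'ana@example.com'}]
print(log)      # ['strip_whitespace: 3 -> 3 rows', 'drop_rows_missing_email: 3 -> 1 rows']
```

Because every rule lives in one named function, the same rule is applied to every row, and the log doubles as a record of what was done.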

5 Critical Steps For Effective Data Cleaning

To make sure you do not draw wrong conclusions, follow the 5 critical steps for effective data cleaning.

1. Data Formatting

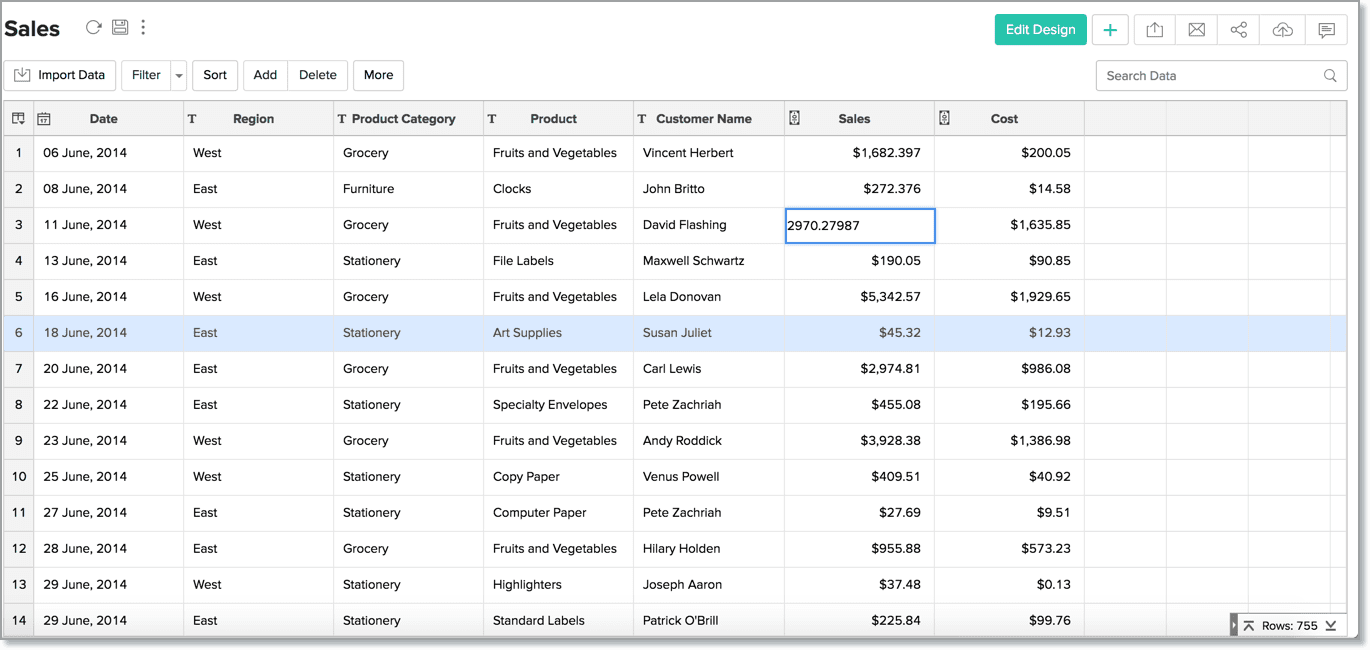

The first step in data cleaning is to assess the quality of your data. This includes checking for missing values, incorrect values, and inconsistencies in the format of your data. Once you have identified these issues, you can start to clean your data by making corrections and formatting changes.
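A quick quality check like this can be scripted before any corrections are made. This is a minimal sketch in plain Python; the column names, the expected `YYYY-MM-DD` date format, and the 0 to 120 age range are assumptions chosen for illustration, not rules from any particular tool.

```python
import re
from collections import Counter

# Expected date format for this illustration: YYYY-MM-DD (an assumption).
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def missing_counts(rows, columns):
    """Count values per column that are absent, None, or blank strings."""
    counts = Counter()
    for row in rows:
        for col in columns:
            value = row.get(col)
            if value is None or (isinstance(value, str) and not value.strip()):
                counts[col] += 1
    return {col: counts[col] for col in columns}


def nonconforming_dates(rows, column):
    """Return non-empty date values that do not match the expected format."""
    return [
        row[column]
        for row in rows
        if row.get(column) and not ISO_DATE.fullmatch(row[column])
    ]


def out_of_range(rows, column, low, high):
    """Return numeric values outside the inclusive range [low, high]."""
    return [
        row[column]
        for row in rows
        if isinstance(row.get(column), (int, float)) and not low <= row[column] <= high
    ]


# Hypothetical sample records.
records = [
    {"name": "Ana", "signup": "2023-01-05", "age": 34},
    {"name": "", "signup": "05/01/2023", "age": -2},
    {"name": "Cy", "signup": None, "age": 41},
]

print(missing_counts(records, ["name", "signup", "age"]))  # {'name': 1, 'signup': 1, 'age': 0}
print(nonconforming_dates(records, "signup"))              # ['05/01/2023']
print(out_of_range(records, "age", 0, 120))                 # [-2]
```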

There are a few different ways to format your data. One common method is to convert all text values to lowercase. This removes inconsistencies in capitalization, so that "London", "LONDON", and "london" are treated as the same value. Another option is to standardize dates so that they all use the same format. This makes it easier to perform calculations on dates, such as finding the number of days between two dates.
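Both formatting changes can be expressed in a few lines of Python. This sketch assumes the input dates arrive in one of three known formats, listed in the hypothetical `DATE_FORMATS` tuple. Note that `%m/%d/%Y` assumes US month-first order, so a value like `05/01/2023` is read as May 1, not January 5.

```python
from datetime import date, datetime

# Assumed input formats; adjust to match what your data actually contains.
# "%m/%d/%Y" treats ambiguous values such as 05/01/2023 as month-first (US order).
# "%b" expects English month abbreviations (the default C locale).
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def normalize_text(value: str) -> str:
    """Trim and lowercase so 'London ', 'LONDON' and 'london' compare equal."""
    return value.strip().lower()


def standardize_date(value: str) -> str:
    """Parse a date in any of the known formats and return it as YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"Unrecognized date format: {value!r}")


def days_between(start: str, end: str) -> int:
    """Once dates share one format, date arithmetic becomes straightforward."""
    return (date.fromisoformat(standardize_date(end))
            - date.fromisoformat(standardize_date(start))).days


print(normalize_text(" LONDON "))                # london
print(standardize_date("5 Jan 2023"))            # 2023-01-05
print(days_between("01/31/2023", "2023-02-10"))  # 10
```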

Once you have made all of the necessary formatting changes, save your data in a new file rather than overwriting the original, so you can always return to the raw data.

2. Data Entry

Data entry is one of the most important steps in data cleaning. Data entry can be done manually or through an automated process. When choosing a data entry method, it is important to consider the accuracy and efficiency of the method.

Manual data entry is often more accurate than automated methods but can be very time-consuming. Automated methods, such as scanning or using optical character recognition, can be faster but are often less accurate.

It is important to validate data after it has been entered to ensure that it is complete and accurate. Data entry errors can introduce inaccuracies into your data set that can lead to incorrect results.
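Validation rules like these can be checked automatically right after entry. A minimal sketch, assuming each entered record is a dictionary; the required fields (`customer_id`, `email`, `quantity`) and the deliberately loose email pattern are hypothetical examples, not a complete validation scheme.

```python
import re

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
REQUIRED = ("customer_id", "email", "quantity")


def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one entered record (empty if none)."""
    errors = []
    for field in REQUIRED:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    email = record.get("email")
    if email and not EMAIL.fullmatch(email):
        errors.append("malformed email")
    qty = record.get("quantity")
    if isinstance(qty, int) and qty <= 0:
        errors.append("quantity must be positive")
    return errors


print(validate_record({"customer_id": "C1", "email": "a@b.com", "quantity": 2}))
# []
print(validate_record({"customer_id": "", "email": "not-an-email", "quantity": 0}))
# ['missing customer_id', 'malformed email', 'quantity must be positive']
```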

To catch errors, it is best to use more than one data entry method and to have trained personnel review the data for accuracy. These steps go a long way toward keeping your data set clean and accurate.
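One common way to combine entry methods is double-entry verification: the same source documents are keyed in twice (by two people, or by OCR and then a person), and the two versions are compared field by field. This sketch is our own illustration of that idea; the function name `compare_entries` and the `id` key are hypothetical.

```python
def compare_entries(first, second, key):
    """Return (key, field, first_value, second_value) for every disagreement."""
    second_by_key = {row[key]: row for row in second}
    first_keys = {row[key] for row in first}
    mismatches = []
    for row in first:
        other = second_by_key.get(row[key])
        if other is None:
            mismatches.append((row[key], "<record>", "present", "missing"))
            continue
        for field in sorted(set(row) | set(other)):
            if row.get(field) != other.get(field):
                mismatches.append((row[key], field, row.get(field), other.get(field)))
    # Records that were keyed only in the second pass.
    for row in second:
        if row[key] not in first_keys:
            mismatches.append((row[key], "<record>", "missing", "present"))
    return mismatches


# Hypothetical results of keying the same two invoices twice.
pass_one = [{"id": 1, "amount": "120.50"}, {"id": 2, "amount": "75.00"}]
pass_two = [{"id": 1, "amount": "120.50"}, {"id": 2, "amount": "57.00"}]

print(compare_entries(pass_one, pass_two, "id"))  # [(2, 'amount', '75.00', '57.00')]
```

Each reported mismatch would then go to a reviewer, who checks it against the source document.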

3. Data Normalization

Data normalization is the process of organizing data so that it can be effectively used in a database. The goal of data normalization is to reduce redundancy and improve the efficiency of data storage. Normalization typically involves splitting up data into multiple tables, each of which stores a specific type of information. For example, a customer database might have separate tables for customer information, order information, and product information.

Normalization often starts with identifying the different types of data that are stored in a database. This can be done by looking at the different fields in each table and determining what kind of information they contain. Once the different types of data have been identified, they can be grouped into separate tables. Each table should then contain only one type of information.
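Here is a minimal Python sketch of that splitting step, assuming a hypothetical flat export in which every order row repeats the customer's and product's details. Each type of information gets its own table keyed by its ID, and the orders table keeps only the keys plus order-specific facts.

```python
# Hypothetical flat export: customer and product details repeat on every order row.
flat_orders = [
    {"order_id": 1, "customer_id": "C1", "customer_name": "Ana",
     "product_id": "P1", "product_name": "Widget", "qty": 2},
    {"order_id": 2, "customer_id": "C1", "customer_name": "Ana",
     "product_id": "P2", "product_name": "Gadget", "qty": 1},
    {"order_id": 3, "customer_id": "C2", "customer_name": "Ben",
     "product_id": "P1", "product_name": "Widget", "qty": 5},
]


def normalize(rows):
    """Split flat rows into customers, products and orders tables."""
    customers, products, orders = {}, {}, []
    for r in rows:
        # Keyed dictionaries store each customer and product only once.
        customers[r["customer_id"]] = {"customer_id": r["customer_id"], "name": r["customer_name"]}
        products[r["product_id"]] = {"product_id": r["product_id"], "name": r["product_name"]}
        # Orders keep only references (keys) plus order-specific facts.
        orders.append({"order_id": r["order_id"], "customer_id": r["customer_id"],
                       "product_id": r["product_id"], "qty": r["qty"]})
    return list(customers.values()), list(products.values()), orders


customers, products, orders = normalize(flat_orders)
print(len(customers), len(products), len(orders))  # 2 2 3
```

One caveat: if the same `customer_id` appears with two different names, this sketch silently keeps the last one, so with real data you would want to flag such conflicts instead.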

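One important thing to keep in mind when normalizing data is that all relationships between the various pieces of data must be maintained. This is usually done with keys: an order row, for example, stores the ID of the customer who placed it, so the original record can always be reconstructed by joining the tables.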
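In a relational database, those relationships are usually enforced with foreign keys. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the table and column names are hypothetical. SQLite only enforces foreign keys when `PRAGMA foreign_keys = ON` is set on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id TEXT NOT NULL REFERENCES customers(customer_id),
        qty         INTEGER NOT NULL
    )""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [("C1", "Ana"), ("C2", "Ben")])
conn.execute("INSERT INTO orders VALUES (1, 'C1', 2)")

# An order pointing at a customer that does not exist breaks the relationship,
# so the database rejects it.
try:
    conn.execute("INSERT INTO orders VALUES (2, 'C3', 1)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Joining on the key reconstructs the full record from the separate tables.
rows = conn.execute(
    "SELECT o.order_id, c.name, o.qty FROM orders o JOIN customers c USING (customer_id)"
).fetchall()

print(rejected)  # True
print(rows)      # [(1, 'Ana', 2)]
```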

4. Data Transformation

Data transformation is the process of converting data from one format or structure to another. Common data transformation tasks include:

- Converting data from a relational database to a flat file

- Converting data from a flat file to a relational database

- Converting data from one character encoding to another (e.g., ISO-8859-1 to UTF-8)

- Converting dates from one format to another (e.g., MM/DD/YYYY to YYYY-MM-DD)

- Normalizing or standardizing data values (e.g., converting all phone numbers to the E.164 format)
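Three of these transformations, sketched in plain Python. The function names are hypothetical, and the phone-number helper assumes US numbers only; E.164 formatting for other countries needs country-specific rules that this sketch does not attempt.

```python
import re
from datetime import datetime


def us_date_to_iso(value: str) -> str:
    """Convert MM/DD/YYYY to YYYY-MM-DD."""
    return datetime.strptime(value, "%m/%d/%Y").strftime("%Y-%m-%d")


def latin1_to_utf8(raw: bytes) -> bytes:
    """Re-encode bytes from ISO-8859-1 (Latin-1) to UTF-8."""
    return raw.decode("latin-1").encode("utf-8")


def us_phone_to_e164(value: str) -> str:
    """Format a US phone number as E.164 (+1 followed by 10 digits). US-only assumption."""
    digits = re.sub(r"[^0-9]", "", value)
    if len(digits) == 10:
        return "+1" + digits
    if len(digits) == 11 and digits.startswith("1"):
        return "+" + digits
    raise ValueError(f"Not a recognizable US number: {value!r}")


print(us_date_to_iso("07/04/2023"))        # 2023-07-04
print(latin1_to_utf8(b"caf\xe9"))          # b'caf\xc3\xa9'
print(us_phone_to_e164("(415) 555-0132"))  # +14155550132
```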

There are many tools and techniques for data transformation, and the right choice depends on the specific needs of the project. Some examples:

- Scripting languages (e.g., Python, Ruby, and Groovy)

- XSLT stylesheets for XML transformations

- Databases and SQL

- JavaScript (e.g., with Node.js)

- CSV transformation tools

- RDBMS metadata manipulation

A common approach is to use a scripting language to write and execute transformation rules. For example, if the data needs to be normalized, you can write a set of scripts that handle each particular case (e.g., the data format, the number of items per row, etc.). Open-source frameworks such as Apache NiFi can also help with this task.
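The rule-based approach described above can be sketched in Python as a table of per-field transformation functions applied in order. The field names and rules in `RULES` are hypothetical examples.

```python
from typing import Callable

# Hypothetical rules: each field maps to the transformations applied to it, in order.
RULES: dict[str, list[Callable[[str], str]]] = {
    "name": [str.strip, str.title],
    "country": [str.strip, str.upper],
    "amount": [str.strip, lambda s: s.replace(",", "")],  # drop thousands separators
}


def apply_rules(record: dict, rules: dict = RULES) -> dict:
    """Return a transformed copy of record; fields without rules pass through unchanged."""
    out = dict(record)
    for field, funcs in rules.items():
        if out.get(field) is not None:
            for func in funcs:
                out[field] = func(out[field])
    return out


print(apply_rules({"name": "  ada lovelace ", "country": "gb ", "amount": "1,250.00", "note": "x"}))
# {'name': 'Ada Lovelace', 'country': 'GB', 'amount': '1250.00', 'note': 'x'}
```

Keeping the rules as data makes it easy to add a new case without touching the code that applies them.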

5. Data Aggregation

Data aggregation is the process of combining data from multiple sources into a single dataset. The goal of data aggregation is to make it easier to analyze large datasets by reducing the amount of data that needs to be processed.

There are a few different methods that can be used for data aggregation, including:

- Averaging: taking the mean of multiple values

- Sampling: selecting a subset of data points from a larger dataset

- Merging: combining two or more datasets into a single dataset
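A minimal sketch of the three methods in plain Python, using hypothetical sales and target records: averaging per group, taking a reproducible random sample, and merging two datasets on a shared key.

```python
import random
from collections import defaultdict
from statistics import mean

# Hypothetical datasets.
sales = [
    {"region": "North", "amount": 120.0},
    {"region": "North", "amount": 80.0},
    {"region": "South", "amount": 200.0},
]
targets = [{"region": "North", "target": 250.0}, {"region": "South", "target": 150.0}]


def average_by(rows, group_key, value_key):
    """Averaging: the mean of value_key for each distinct group_key."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    return {group: mean(values) for group, values in groups.items()}


def sample(rows, k, seed=42):
    """Sampling: k rows chosen at random without replacement; the seed makes it repeatable."""
    return random.Random(seed).sample(rows, k)


def merge(left, right, key):
    """Merging: join rows that share a key (rows without a match are dropped)."""
    right_by_key = {row[key]: row for row in right}
    return [{**row, **right_by_key[row[key]]} for row in left if row[key] in right_by_key]


print(average_by(sales, "region", "amount"))  # {'North': 100.0, 'South': 200.0}
print(len(sample(sales, 2)))                  # 2
print(merge(sales, targets, "region")[0])     # {'region': 'North', 'amount': 120.0, 'target': 250.0}
```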

The method you use will depend on the type of data you are working with and your analysis goals. A common mistake when aggregating data is assuming that all values are equally important when some values may be more representative than others. It is important to carefully consider which method will best suit your needs before aggregating your data.
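To see why this matters, suppose you average per-store average order values where one store handled far more orders than the other. A minimal sketch with hypothetical numbers: the simple mean treats both stores equally, while a weighted mean counts each store in proportion to its number of orders.

```python
def simple_mean(values):
    """Every value counts equally."""
    return sum(values) / len(values)


def weighted_mean(values, weights):
    """Each value counts in proportion to its weight."""
    if len(values) != len(weights) or sum(weights) == 0:
        raise ValueError("values and weights must have equal length and non-zero total weight")
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical data: average order value per store, and the number of orders behind each.
store_avg_order = [50.0, 100.0]
store_order_count = [900, 100]

print(simple_mean(store_avg_order))                       # 75.0
print(weighted_mean(store_avg_order, store_order_count))  # 55.0
```

The weighted result, 55.0, is the true average across all 1,000 orders; the simple mean of 75.0 overstates it because the small store counts as much as the large one.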

Averaging: The mean, or average value of a dataset, is the sum of all values divided by the number of values. The mean is the most common measure of central tendency and is most useful when the data is roughly symmetric about a central value. You can think of it as the balance point of your dataset. Because a few extreme values can pull the mean far away from the rest, it is often worth calculating it both with and without outliers.
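The definition, mean = (x1 + x2 + ... + xn) / n, and the effect of a single outlier fit in a few lines of Python. The values and the cut-off of 100 are hypothetical; how you decide what counts as an outlier depends on your data.

```python
def mean(values):
    """Arithmetic mean: the sum of all values divided by how many there are."""
    return sum(values) / len(values)


def mean_excluding(values, low=float("-inf"), high=float("inf")):
    """Mean of only the values inside [low, high]; the bounds are the analyst's choice."""
    kept = [v for v in values if low <= v <= high]
    return mean(kept)


# Hypothetical daily order counts with one extreme day.
orders_per_day = [10, 12, 11, 13, 14, 200]

print(round(mean(orders_per_day), 2))            # 43.33
print(mean_excluding(orders_per_day, high=100))  # 12.0
```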

Conclusion

Data cleaning is an important step in any data analysis process. It is important to understand the different methods of data cleaning and when to use them. By following the steps outlined in this article, you can ensure that your data is clean and ready for analysis.

At Zuci Systems, we serve businesses of all sizes, helping them reveal trends and metrics lost in their mass of information with our data science and analytics services. We’ll help companies adapt their business strategy and predict what’s next for their business, fast. Book a demo and change the way you analyze data!
