
Achieving Software QA Excellence: A Practical Approach to Gap Analysis


Is software or product quality a tester’s responsibility? In some organizations that is a commonly held belief, while in others the opposite view prevails.

Developers often feel that testers are slowing them down and not providing clear information in their bug reports, while testers may get frustrated with developers who fail to keep them in the loop with code changes. And let’s not forget the Ops team.

While these stereotypes may not always be true, the communication gap between developers and testers is a real issue that can lead to inadequate testing and software failures. Over time, the gap also adds to an organization’s technical debt as the code base evolves, among other reasons.

This is where gap analysis in software testing comes into the picture.

Gap analysis is an in-depth review of the existing testing or QA processes and practices followed in an organization. Its goal is to identify areas for improvement and to suggest enhancements to existing processes, or additional processes, that would improve QA outcomes and metrics.

Why is QA gap analysis required?

Quality is everyone’s responsibility – W. Edwards Deming

In his classic book, Dynamics of Software Development, Jim McCarthy illustrates the kind of thinking that typifies quality culture when he says that the goal of every team member (regardless of function) is the same: “to ship great software on time.”

If you’re part of the quality assurance team, it’s highly likely that you are an integral part of the larger engineering team spread across the world. Therefore, it becomes imperative for you to explain the significance of gap analysis not only for the QA process but also for the entire organization.

Below are some goals that call for a QA gap analysis:

- Review the software testing practices and capabilities across teams

- Assess the overall test process effectiveness and efficiency

- Determine the engineering maturity of the organization

- Improve test execution and maintenance

- Reduce defect leakage to production

- Improve user experiences

- Achieve significant testing ROI over time

- Implement better documentation and coding practices

- Improve agility and change management

Gap Analysis is a crucial step in identifying pain points in an application or existing processes and serves as a path towards improvement when stakeholders seek to refine their processes. The Gap Analysis Report offers comprehensive feedback, along with valuable suggestions and recommendations to enhance the current testing processes.
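One of the goals listed above, reducing defect leakage to production, is easier to track once it is expressed as a number. The sketch below assumes one common definition (defects found in production as a share of all known defects for a release); the function name and the release figures are hypothetical and not taken from any client engagement.

```python
def defect_leakage_rate(found_in_testing: int, found_in_production: int) -> float:
    """Percentage of all known defects that escaped to production."""
    total = found_in_testing + found_in_production
    if total == 0:
        # No defects recorded at all, so nothing leaked.
        return 0.0
    return 100.0 * found_in_production / total


# Hypothetical data: (defects caught before release, defects found in production).
releases = {"R1": (45, 5), "R2": (38, 2)}

for name, (pre_release, production) in releases.items():
    rate = defect_leakage_rate(pre_release, production)
    print(f"{name}: {rate:.1f}% leakage")
```

Comparing this figure across releases is one way to check whether the recommendations from a gap analysis are having an effect.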

Prerequisites for a QA gap analysis assignment

In order to successfully conduct a QA gap analysis assignment, it is essential to ensure that certain prerequisites are met. Different teams use different methods, but they all predominantly include:

- Clear understanding of the application or process being analyzed

- Access to all relevant documentation and information pertaining to the application or process

- QA experts with relevant domain or industry expertise

- Defined quality goals and benchmarks to compare against the current state

- Cooperation and participation of all relevant stakeholders

- Adequate resources, including time, budget, and tools, to conduct the analysis effectively.

When these prerequisites are fulfilled, QA gap analysis becomes easy to perform and yields effective results.

To ensure objectivity and avoid inherent biases, it is recommended that the QA consultant assigned to a project be independent of the team. Providing the consultant with relevant documentation regarding the project processes can aid in their understanding of the project. Additionally, setting up an appropriate environment for the consultant is essential to facilitate effective analysis.
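The prerequisite of defined quality goals and benchmarks is what makes a gap measurable: each gap is the distance between a target and the current state. The following is a minimal sketch of that comparison, assuming every metric is a percentage; the `Metric` class, the metric names, and all values are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    name: str
    current: float
    target: float
    higher_is_better: bool = True

    def gap(self) -> float:
        """Distance still to cover; 0.0 means the target is already met."""
        if self.higher_is_better:
            diff = self.target - self.current
        else:
            diff = self.current - self.target
        return max(diff, 0.0)


def open_gaps(metrics):
    """Return metrics that miss their target, largest gap first."""
    missed = [m for m in metrics if m.gap() > 0]
    return sorted(missed, key=lambda m: m.gap(), reverse=True)


# Hypothetical benchmarks versus current state.
metrics = [
    Metric("automation coverage (%)", current=40.0, target=70.0),
    Metric("defect leakage (%)", current=10.0, target=5.0, higher_is_better=False),
    Metric("requirements with test cases (%)", current=95.0, target=90.0),
]

for m in open_gaps(metrics):
    print(f"{m.name}: gap of {m.gap():.1f} points")
```

Sorting by raw gap only makes sense when the metrics share a unit, as they do here; a real assessment would likely weight them by business impact.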

A Gap Analysis Use Case

For a recent QA gap analysis engagement, the team from Zuci was keen to understand the “quality culture” at the client’s organization. Here are the requirements that Zuci’s team laid down for the client:

- The Zuci team needs key members from both the Dev and QA teams to spend the required time helping it gain product knowledge and understand the engineering team’s practices.

- In addition to product/module owners, the Zuci team’s consultant and test analyst may also need to interact with developers, architects, support teams, and Build/Release Engineering teams to understand the critical pieces of the application, if required.

- The client must ensure that application access, tool access, system access, test automation source access, and test data are available to the Zuci team during the assessment phase.

- The client must share production defects and the test reports and metrics of previous releases for investigation.

Resource requirement from client side

1. Product & Engineering Overviews Phase:

- 2-3 Hours – Product Overview & KT [By: QA or BA]

- 2-3 Hours – Dev Process & Overview [By: Dev]

- 8-10 Hours – QA Process & Overview [By: QA]

2. Assessment Phase:

- 30 minutes – 1 Hour per day – Discussion / Interviews / Sampling on demand basis
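To give the client a single figure to plan around, the ranges above can be added up. The sketch below sums the overview-phase sessions and estimates the assessment-phase time; the number of working days passed to `assessment_total` is a hypothetical input, since the length of the assessment phase depends on the engagement.

```python
# Hour ranges (min, max) for the overview phase, taken from the list above.
overview_sessions = {
    "Product Overview & KT": (2, 3),
    "Dev Process & Overview": (2, 3),
    "QA Process & Overview": (8, 10),
}


def overview_total(sessions):
    """Sum the lower and upper bounds of all overview sessions."""
    low = sum(lo for lo, _ in sessions.values())
    high = sum(hi for _, hi in sessions.values())
    return low, high


def assessment_total(working_days, per_day=(0.5, 1.0)):
    """Client hours for the assessment phase at 30 minutes to 1 hour per day."""
    return working_days * per_day[0], working_days * per_day[1]


print(overview_total(overview_sessions))  # (12, 16)
print(assessment_total(10))  # hypothetical 10 working days
```

Under these figures the overview phase needs 12 to 16 hours of client time in total.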

Performing QA gap analysis

Good software quality is not about testing alone but encompasses other software engineering practices such as clarity in requirements, estimation, code quality, documentation, technical debt, software maintenance, individual skills, team competencies and other factors.

Once the scope of the gap analysis is defined, it is essential to agree on timelines for the engagement with all relevant stakeholders.

Next, it is useful to break the gap analysis consultation areas into smaller chunks, such as functional testing, automation testing, and quality processes. This ensures that all relevant areas are covered in the analysis.

To conduct a thorough analysis, the team needs access to resources such as JIRA boards, defect reports, and CI/CD tools. These resources can provide valuable insights into the current state of the application or process being assessed. The first-level gap analysis report is then presented.

Following the above steps, Zuci’s team came up with these initial observations and recommendations for one of its clients.

| First-level observation | Recommendation |
|---|---|
| Standard coding practices are not followed | Standard coding practices must be followed, including code documentation and code commenting (addition of headers in files). Static code analysis needs to be done. |
| Core documentation is not updated on a regular basis | Core documentation needs to be updated whenever there is a change in the exposed API |
| A test case does not exist for every use case | A test case for every use case must be documented and approved by the QA lead / manager |
| No validation or approval happens when a test case is added to or removed from the regression bed | All test cases that are part of the regression test suite must be validated and approved by the QA lead / manager |
| Not all test cases are automated | Not all test cases are automated; automated test cases need to be robust |
| Testing notes from core are not circulated to the custom QA team before a new core build release | Testing notes from core should be circulated to the custom QA team for every release, which will help the custom team plan its testing in advance |
| Traceability matrix is not implemented | Traceability matrix needs to be followed as a process |
| Root Cause Analysis is not done | Root Cause Analysis needs to be done and documented, and corrective actions need to be tracked to closure |
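Two of the observations above, the missing traceability matrix and use cases without test cases, are closely related: once test cases are tagged with the use cases they verify, the uncovered use cases fall out of the matrix directly. The sketch below illustrates the idea with hypothetical IDs (`UC-*`, `TC-*`); in practice this data would come from a test management tool.

```python
# Hypothetical inventory: every use case, and each test case with the
# use cases it verifies.
use_cases = ["UC-1", "UC-2", "UC-3"]
test_cases = {
    "TC-101": ["UC-1"],
    "TC-102": ["UC-1", "UC-2"],
}


def build_traceability(use_cases, test_cases):
    """Map each use case to the test cases that cover it."""
    matrix = {uc: [] for uc in use_cases}
    for tc, covered in sorted(test_cases.items()):
        for uc in covered:
            # setdefault keeps test cases that point at unlisted use cases.
            matrix.setdefault(uc, []).append(tc)
    return matrix


def uncovered(matrix):
    """Use cases with no test case at all."""
    return [uc for uc, tcs in matrix.items() if not tcs]


matrix = build_traceability(use_cases, test_cases)
for uc, tcs in matrix.items():
    print(uc, "->", ", ".join(tcs) if tcs else "NOT COVERED")
print("Uncovered:", uncovered(matrix))
```

Here `UC-3` is reported as uncovered, which is the kind of gap a QA lead would review before approving the regression suite.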

How does QA gap analysis bridge the gap among teams?

QA gap analysis takes a bird’s-eye view of software and quality gaps. This means talking to the different teams involved in the SDLC, identifying dependencies among them, finding the root causes of defects, and helping to fix them.

Although implementing new technology may often appear to be the solution to many business or product quality problems, it is crucial to consider the long-term effects.

Like a band-aid on a wound, technology may provide a temporary fix; however, it does not address the root cause of the injury.

Companies should recognize the value of QA consultants as diagnosticians in identifying and resolving complex quality problems and situations. Contrary to what many think, gaps in quality have a lot to do with the people and teams involved.

QA Gap Analysis is a process that requires a thorough understanding of the existing software development and operations processes, including the Environment Setup, Automation Framework, and CI/CD Tools. To facilitate this analysis, the consultant must collaborate closely with the Development and DevOps teams to gather inputs and ensure that the recommended process is feasible.

For the gap analysis assignment with our client, Zuci’s team dived deep into the following areas to get a clear picture of their processes:

- Test engineering

- Test management

- Test governance & compliance

- Training & development

- Agile process

- Engineering process

- Change management

- Continuous improvement

- Risk management

Based on the analysis, here are high-level findings:

- The need for an end-to-end test strategy

- Focus on engineering automation is missing

- Visible signs of dependency on people

- The mechanism to monitor and measure process improvements can be better

- Collaboration efforts need to be more effective

QA Gap Analysis helped me to look at how the best practices in CI/CD could help provide better outcomes for QA. – Supraja Sivaraj

Zuci’s approach to QA gap analysis

At Zuci, we believe in CARE (Check, Act, Refine, Evolve), a 360-degree approach to software quality. Our approach has been carefully built based on problems we have repeatedly encountered while working with our clients.


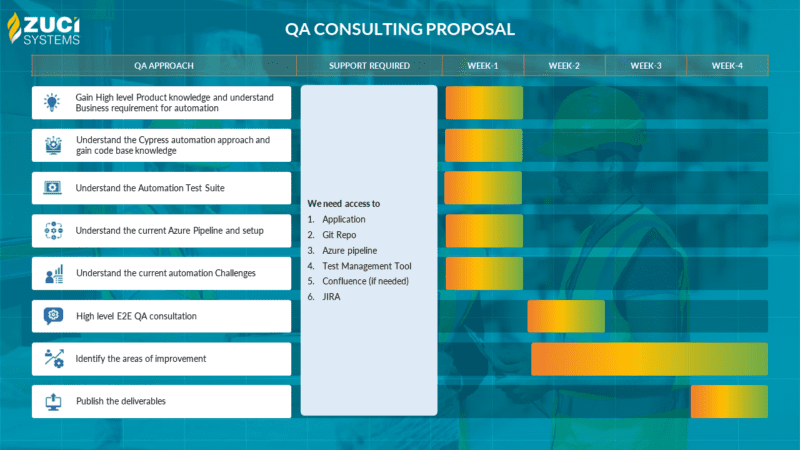

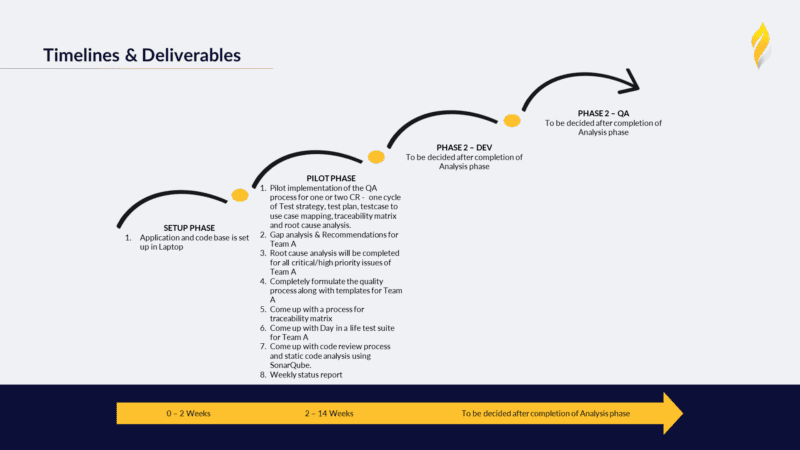

Based on the requirements, our approach typically spans from 4 to 14 weeks.

Here’s what Supraja Sivaraj, SDET lead, has to say about her recent gap analysis consultation.

At Zuci, we like to take a phased approach to QA gap analysis. To make sure our recommendations are acted on, we keep in touch with our clients on a regular basis. That way, we can bounce ideas off each other and see the recommendations implemented in a timely manner.

Below is an example of a QA consultation roadmap for a client, proposed by our team

After presenting our findings and recommendations, we create a roadmap of quantifiable engagement outcomes.

The following is another example of a phase 1 roadmap for one of our clients, a global e-commerce solution provider, who has become a long-term partner to date.

For this assignment, we had teams working from three different locations across the globe. We used Microsoft Teams, Outlook, and other project management tools to manage project communications and collaboration efficiently.

We received strong support from the client’s product and services teams, whose members made adequate time available for meetings, recurring sessions, follow-ups, and so on. The tools used for this assignment were SharePoint, JIRA, MS Outlook, MS Teams, MS Excel, and Tableau for data analysis.

For communication and collaboration, we had daily meetings, follow-up meetings, weekly management review meetings, Zuci whiteboard sessions, brainstorming sessions, and task management. Our processes included going through meeting recordings, taking notes, reviewing the client’s product, SharePoint documents, and QA artifacts, and preparing checklists for the review meetings.

After extensive analysis, Zuci prepared a report that presents major findings and recommendations, QA scorecard, engineering process assessment, SWOT analysis, and ERICS (Eliminate, Reduce, Increase, Create, Stabilize).

Like to read the detailed report? Drop a Hello at sales@zucisystems.com

In software testing, the ultimate goal of conducting a gap analysis is to obtain an accurate overview of the health of the system. Breaking down the analysis process into well-defined phases can simplify the process and make test planning, execution, and monitoring easier.

It is recommended to start with only one controlled testing environment in the initial phases. After the successful implementation of this approach, multiple testing environments can be integrated. By taking this incremental approach to gap analysis, the risk of failure is minimized, and it becomes easier to move from simpler setups to more complex ones.

When it comes to software quality, a holistic approach matters most. That is why the Zuci team has been able to help its clients achieve the desired quality, meet release schedules, and deliver seamless experiences for their users.

Facing software quality related challenges? Don’t worry, we’ve got you covered! Let our team at Zuci help you out and watch your user experience skyrocket.

So why wait? Let’s work together and make your software the best it can be!
