Data Pipeline Components, Types, and Use Cases

Data is essential in helping organizations build better strategies and make informed decisions in today’s modern marketplace. It allows businesses to be strategic in their approaches, back up arguments, find solutions to everyday problems, and measure the effectiveness of a given strategy.

Alongside these advantages, managing and accessing data is a demanding task with many moving parts. This is where a data pipeline proves most helpful.

A data pipeline gives data scientists and teams access to data on cloud platforms. Data pipeline tools control the flow of data from a starting point to a specified endpoint, letting you collect data from many sources and turn it into insights that give you a competitive edge.

Even the most seasoned data scientists can rapidly become overwhelmed by the significant data volume, velocity, and diversity. For this reason, organizations employ a data pipeline to turn raw data into analytical, high-quality information.

To deepen your understanding of data pipelines, we have put together this guide covering their components, types, and use cases.

Let’s dive right in.

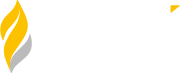

What is a Data Pipeline?

A data pipeline is a set of tools, actions, and activities implemented in a specific order to collect raw data from multiple sources and move it to a destination for storage and analysis. It makes it possible to automatically gather data from several sources, transform it, and combine it into a single, high-performance data store.

The five critical components of a data pipeline are preprocessing, storage, analysis, applications, and delivery. These five components help organizations work seamlessly with data and generate valuable insights.

Why Should You Implement a Data Pipeline?

A data pipeline helps you make sense of big data and transform it into high-quality information for analysis and business intelligence. Regardless of size, all businesses can use data pipelines to remain competitive in today’s market. This data can be further utilized to understand client needs, sell goods, and drive revenue. Since it offers five essential elements, data pipeline integration plays a significant role in the process and enables businesses to manage enormous amounts of data.

Data is expanding quickly and will keep expanding. As it does, data pipelines will help turn raw data into a daily stream of usable data. Applications, data analytics, and machine learning can all use this transformed data. If you intend to use this data, you will need data integration, which in turn requires a data pipeline.

Data Pipeline vs ETL

The terms ETL and data pipeline are sometimes used interchangeably, but they differ slightly. ETL stands for extract, transform, and load. ETL pipelines are widely used to extract data from a source system, transform it according to requirements, and load it into a database or data warehouse, mainly for analytical purposes.

- Extract: Data acquisition and ingestion from original, diverse source systems.

- Transform: Moving data to a staging area for short-term storage and transforming it so that it complies with the formats accepted by other applications, such as analysis tools.

- Load: Loading the reformatted data into the target system.

Data pipeline, on the other hand, is a more general term that includes ETL as a subset. It refers to a system used to transfer data between systems. The data may or may not be transformed along the way. Depending on the business and data requirements, it may be handled in batches or in real time. The data may be loaded into various destinations, such as an AWS S3 bucket or a data lake.

It may even activate a Webhook on another system to initiate a particular business activity.

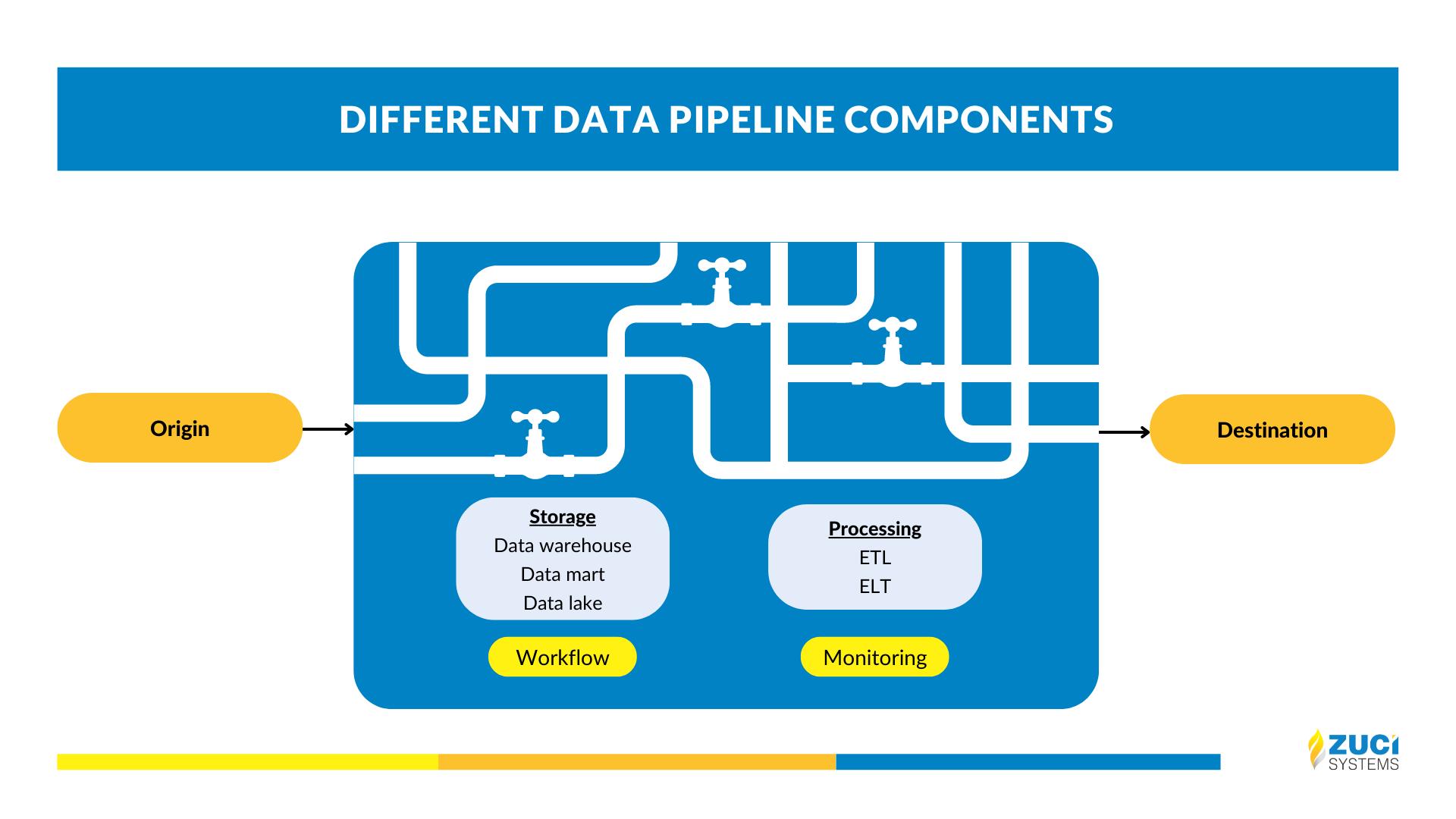

Different Data Pipeline Components

A data pipeline consists of various components. All these data pipeline components have their technical requirements and challenges to overcome. You must first be familiar with the components of a data pipeline to comprehend how it functions. A typical data pipeline’s structure resembles this:

1. Origin

In a data pipeline, the origin is the point where data enters the pipeline. It is often driven by data store design. Most pipelines originate from transactional processing applications, application APIs, IoT device sensors, and social media, or from storage systems such as data warehouses and data lakes.

The ideal origin is usually wherever it makes the most sense to reduce latency for near real-time pipelines or to optimize transactional performance and storage costs. If transactional systems deliver the trustworthy and timely information required at the pipeline’s destination, they should be treated as origins.

2. Destination

A destination is the endpoint to which data is finally transported. The endpoint can be anything from a data warehouse to a data lake, depending mostly on the use case. It is what the entire process is aimed at, so you should start here when designing your data pipelines.

Origin and destination are determined together: endpoint needs affect the discovery of data sources, and data source selection influences the choice of pipeline endpoints. For instance, you need to consider both the timeliness requirements at the pipeline’s destination and the latency restrictions at the pipeline’s origin.

3. Dataflow

Origin and destination together determine what goes in the pipeline and what comes out, whereas dataflow is what specifies how data moves through a pipeline. In simple words, dataflow is the order in which processes and stores are utilized to transport data from a source to an endpoint. It refers to the transfer of data between points of origin and destinations as well as any modifications that are made to it. The three phases of dataflow are:

- Extract: The process of extracting all essential data from the source. Typical sources include databases such as MySQL, Oracle, and MongoDB, as well as CRM and ERP systems.

- Transform: The process of converting the essential data into a format and structure fit for analysis, often with the help of business intelligence or data analysis tools. It includes activities like filtering, cleansing, validating, de-duplicating, and authenticating.

- Load: The process of storing the transformed data in the desired location. Common load targets are data warehouses like Amazon Redshift, Google BigQuery, Snowflake, and others.
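The three phases above can be sketched in a few lines of Python. This is a minimal illustration only: the CSV text stands in for a real source system, an in-memory SQLite database stands in for a data warehouse, and the column names and cleaning rules are assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a database, CRM, or API.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.25
1,alice,10.50
"""

def extract(text):
    """Extract: read every row from the source as a dict."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: validate, de-duplicate, and cast types."""
    seen = set()
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # validation: skip records with a missing amount
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # de-duplication on the order id
        seen.add(order_id)
        clean.append((order_id, row["customer"].title(), float(row["amount"])))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each phase in its own function makes it easier to swap the source or the destination later without touching the transformation rules.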

4. Storage

Storage refers to all the systems that are used to maintain data as it moves through the various stages of the data pipeline. The factors that influence the storage options available are the volume of data, how frequently and thoroughly a storage system is searched, and how the data will be used.

5. Processing

Processing refers to the steps and activities followed to collect, transform, and distribute data across the pipeline. Data processing, while connected to the dataflow, focuses on the execution of this movement. Processing converts input data into output data by completing the proper steps in the correct order. This data is exported or extracted during the ingestion process and further improved, enhanced, and formatted for the intended usage.

6. Workflow

The workflow outlines the order of activities or tasks in a data pipeline and how they depend on one another. It helps to understand three terms here: jobs, upstream, and downstream. A job is a discrete unit of work that completes a specific task, in this case transforming data. Upstream and downstream refer to the sources and destinations of data traveling through a pipeline.
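One way to picture job dependencies is as a graph that is sorted so every job runs after its upstream jobs. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the job names are hypothetical and chosen only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical jobs: each key maps to the set of upstream jobs it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders_customers": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_orders_customers"},
}

def execution_order(deps):
    """Return one valid run order in which upstream jobs always come first."""
    return list(TopologicalSorter(deps).static_order())

order = execution_order(dependencies)
print(order)
```

The two extract jobs have no dependency on each other, so a scheduler is free to run them in either order or in parallel.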

7. Monitoring

The main objective of monitoring is to examine the efficiency, accuracy, and consistency of the data as it moves through the various processing stages of the data pipeline and to ensure that no information is lost along the way. It is done to ensure that the Pipeline and all of its stages are functioning properly and carrying out the necessary tasks.
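As a rough illustration of this idea, the sketch below counts the records entering and leaving each stage and flags stages whose error rate is too high. The stage names, the sample records, and the 10% threshold are assumptions for the example, not values from any particular tool.

```python
def run_stage(name, func, records, metrics):
    """Run one stage and record how many records went in, came out, and failed."""
    output, errors = [], 0
    for record in records:
        try:
            output.append(func(record))
        except (ValueError, TypeError, KeyError):
            errors += 1  # count the failure instead of silently dropping it
    metrics[name] = {"in": len(records), "out": len(output), "errors": errors}
    return output

def failing_stages(metrics, max_error_rate):
    """Return the names of stages whose error rate exceeds the threshold."""
    return [
        name for name, m in metrics.items()
        if m["in"] and m["errors"] / m["in"] > max_error_rate
    ]

metrics = {}
raw = ["1", "2", "oops", "4"]  # hypothetical input with one bad record
parsed = run_stage("parse", int, raw, metrics)
doubled = run_stage("double", lambda n: n * 2, parsed, metrics)
print(metrics)
print(failing_stages(metrics, max_error_rate=0.1))
```

Comparing the "out" count of one stage with the "in" count of the next is a simple way to confirm that nothing was lost between stages.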

Data Pipeline Architecture

Businesses are increasingly adopting cutting-edge tools, cloud-native infrastructure, and technologies to improve their business operations. This calls for transferring huge amounts of data. Automated data pipelines are essential elements of this modern stack, enabling businesses to enrich their data, collect it in one location, analyze it, and enhance their business intelligence. This modern stack includes:

- An Automated Data Pipeline tool

- A destination Cloud platform such as Databricks, Amazon Redshift, Snowflake, Data Lakes, Google BigQuery, etc.

- Business Intelligence tools like Tableau, Looker, and Power BI

- A data transformation tool

Data ingestion, transformation, and storage are the three main phases of the data pipeline architecture.

1. Data ingestion

Data, both structured and unstructured, is gathered from several data sources. In streaming systems, these sources are commonly called producers, publishers, or senders. Before processing raw data, it is generally preferable to store it in a cloud data warehouse first. Keeping the raw records also lets businesses reprocess older data when their data processing tasks change.

2. Data Transformation

In this stage, a series of jobs processes the data into the format required by the destination data repository. These jobs automate repetitive workstreams, such as business reporting, and ensure that data is cleansed and transformed consistently. For a data stream in nested JSON format, for instance, the transformation step may extract key fields from each nested document.
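To make the nested JSON case concrete, here is a small sketch that pulls a handful of key fields out of each event and flattens them into a row. The event layout and the field names in `FIELDS` are hypothetical.

```python
import json

def get_path(document, path, default=None):
    """Follow a dotted path such as 'user.address.city' through nested dicts."""
    current = document
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return default  # missing fields become None instead of raising
        current = current[key]
    return current

# Hypothetical mapping of output column -> path inside the nested event.
FIELDS = {
    "event_id": "id",
    "user_id": "user.id",
    "city": "user.address.city",
    "amount": "payload.amount",
}

def flatten_event(line):
    """Parse one JSON line and keep only the key fields as a flat dict."""
    event = json.loads(line)
    return {column: get_path(event, path) for column, path in FIELDS.items()}

stream = [
    '{"id": "e1", "user": {"id": 7, "address": {"city": "Chennai"}}, "payload": {"amount": 12.5}}',
    '{"id": "e2", "user": {"id": 9}, "payload": {"amount": 3}}',
]
rows = [flatten_event(line) for line in stream]
print(rows)
```

Returning a default for missing paths keeps the pipeline running when some events omit optional fields, which is common in real event streams.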

3. Data Storage

Following transformation, the data is stored in a data repository, where it is made accessible to various stakeholders. In streaming systems, these stakeholders are commonly called consumers, subscribers, or recipients.

Types of Data Pipeline architecture

To create an effective data pipeline, you must first be aware of the available architectures. Different data pipeline architectures offer different benefits, and we’ll look at each of them in more detail below:

ETL Data Pipeline

The term “ETL pipeline” refers to a series of operations that are carried out to transfer data from one or more sources into a target database, typically a data warehouse. The three steps of data integration, “extract, transform, and load” (ETL), are intertwined and allow data transfer from one database to another. After the data has been imported, it can be analyzed, reported, and used to inform strategic business decisions.

Use cases:

- Facilitate the transfer of information from an older system to a modern data storage system.

- By collecting all the data in one place, new insights can be gained.

- Complementing information stored in one system with information stored in another.

ELT Data Pipeline

ELT (extract, load, and transform) describes the steps a data pipeline uses to replicate data from the source system into a destination system, such as a cloud data warehouse, where it is then transformed.

Use cases:

- Facilitating on-the-fly reporting

- Facilitating examination of data in real-time

- Activating supplementary software to carry out supplementary enterprise procedures
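The difference between ELT and ETL is mostly about where the transformation runs. In the sketch below, raw rows are loaded unchanged and then cleaned with SQL inside the destination. An in-memory SQLite database stands in for a cloud data warehouse, and the table and column names are assumptions for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Extract + Load: copy source rows into a raw table without changing them.
source_rows = [("1", " Alice ", "10.50"), ("2", "BOB", "n/a"), ("3", "carol", "7.25")]
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", source_rows)

# Transform: runs inside the destination, using its own SQL engine.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           LOWER(TRIM(customer))     AS customer,
           CAST(amount AS REAL)      AS amount
    FROM raw_orders
    WHERE amount GLOB '[0-9]*'       -- keep only rows whose amount starts with a digit
""")
clean = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(clean)
```

Because `raw_orders` is kept as loaded, the transformation can be rewritten and rerun later without going back to the source system.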

Batch Data Pipeline for Traditional Analytics

Organizations use batch data pipelines when they regularly need to move large amounts of data. In most cases, a batch data pipeline runs on a predetermined schedule, for instance every 24 hours, or whenever the data volume hits a predetermined threshold.

Use Cases:

- Constructing a data warehouse for monthly analysis and reporting

- Integration of data across two or more platforms
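The volume-threshold trigger used by batch pipelines can be sketched as a buffer that hands records to a sink once enough have accumulated. The class name, the threshold of three records, and the list used as a sink are all hypothetical choices for the example.

```python
class BatchBuffer:
    """Collect records and flush them as one batch when a size threshold is hit."""

    def __init__(self, max_records, sink):
        self.max_records = max_records
        self.sink = sink          # callable that receives each completed batch
        self.pending = []

    def add(self, record):
        self.pending.append(record)
        if len(self.pending) >= self.max_records:
            self.flush()          # threshold reached: process the batch now

    def flush(self):
        if self.pending:
            self.sink(list(self.pending))
            self.pending.clear()

batches = []
buffer = BatchBuffer(max_records=3, sink=batches.append)
for record in range(7):
    buffer.add(record)
buffer.flush()  # a scheduler (for example a nightly job) would trigger this final flush
print(batches)
```

In a scheduled setup, the same `flush` call would simply be invoked by the scheduler at the end of each window, regardless of how many records are pending.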

Stream-based Pipeline

Real-time or streaming analytics involves drawing conclusions from swift data flows within milliseconds. Contrary to batch processing, a streaming pipeline continuously updates metrics, reports, and summary statistics in response to each event that becomes available. It also continuously ingests a series of data as it is being generated.

Use cases:

- IT Infrastructure Monitoring and Reporting

- Troubleshooting software, hardware, and more through log monitoring

- Security information and event management (SIEM): monitoring, measuring, and detecting threats by analyzing logs and real-time event data.

- Retail and warehouse stock: managing stock in all stores and warehouses and making it easy for customers to access their orders from any device

- Ride matching: pairing rides with the most suitable drivers in terms of distance, location, pricing, and wait time, which requires integrating data about locations, users, and fares into analytic models.
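What sets a streaming pipeline apart is that metrics are updated per event rather than recomputed over a stored batch. The sketch below keeps a running count, mean, and maximum that change with every incoming value. The response-time readings are made-up sample data.

```python
class RunningStats:
    """Summary statistics that are updated incrementally, one event at a time."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self.maximum = None

    def update(self, value):
        self.count += 1
        # Incremental mean: no need to keep earlier events in memory.
        self.mean += (value - self.mean) / self.count
        self.maximum = value if self.maximum is None else max(self.maximum, value)
        return self.count, self.mean, self.maximum

def event_stream():
    """Hypothetical response-time readings in milliseconds, arriving one by one."""
    yield from [120, 80, 100, 300]

stats = RunningStats()
snapshots = [stats.update(value) for value in event_stream()]
print(snapshots[-1])
```

Each call to `update` returns a fresh snapshot, which is what a live dashboard or alerting rule would read after every event.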

Lambda Pipeline

This pipeline combines a batch pipeline with a streaming pipeline. Because it enables programmers to take into consideration both Real-time Streaming use cases and Historical Batch analysis, this architecture is frequently utilized in Big Data contexts. The fact that this design promotes keeping data in its raw form means that developers may run new pipelines to fix any problems with older pipelines or incorporate additional data destinations depending on the use case.
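A common way to describe this combination is with a batch layer, a speed layer, and a serving layer that merges the two. Those layer names are standard Lambda architecture terminology rather than terms used in this article, and the page-view events below are hypothetical sample data.

```python
from collections import Counter

def batch_view(historical_events):
    """Batch layer: recompute totals from the full raw history."""
    return Counter(event["page"] for event in historical_events)

def speed_view(recent_events):
    """Speed layer: count only events that arrived after the last batch run."""
    return Counter(event["page"] for event in recent_events)

def serve(batch, speed):
    """Serving layer: merge both views to answer queries with up-to-date totals."""
    return batch + speed

# Raw events are kept as-is, so the batch view can always be rebuilt from scratch.
historical = [{"page": "/home"}, {"page": "/home"}, {"page": "/pricing"}]
recent = [{"page": "/home"}, {"page": "/docs"}]

merged = serve(batch_view(historical), speed_view(recent))
print(dict(merged))
```

Because the batch view is derived entirely from raw events, a bug in the counting logic can be fixed and the view recomputed, which is the benefit of keeping data in its raw form.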

Use cases:

- Rapid conversion: Users may not always need specs, manuals, or transaction data in one standard format, so creating documents on demand is typically easier. AWS Lambda functions can quickly retrieve, format, and transform content for display online or for download.

- Backups and daily duties: Scheduled AWS Lambda events are well suited to performing maintenance on AWS accounts. With Lambda, routine tasks such as creating backups, monitoring idle resources, and generating reports can be set up quickly.

Big Data pipeline for Big Data analytics

Developers and designers have had to adjust to Big Data’s growing variety, volume, and velocity. As the name suggests, Big Data means large volumes of data. This large amount of information can enable investigative reporting, monitoring, and data analytics.

Unlike regular data pipelines, Big Data pipelines can transform very large volumes of data, processing it in streams or in batches. Regardless of the method, a Big Data pipeline must be scalable to meet business needs.

Use cases:

- Construction businesses keep meticulous records of everything from labor hours to material expenditures.

- Stores, both physical and virtual, that monitor consumer preferences.

- Big Data is used by the banking and financial sectors to forecast data trends and enhance customer service quality.

- Healthcare systems sift through mountains of data, searching for new and improved therapies.

- There are several ways in which companies operating in the social, entertainment, and communications industries use Big Data, such as the provision of real-time social media updates, the facilitation of connections among smartphones, and the enhancement of HD media streaming.

Advantages and Disadvantages of Data pipeline architecture

| Examples of Data pipeline architecture | Advantages | Disadvantages |
| --- | --- | --- |
| ETL Data pipeline | | |
| ELT Data Pipeline | | |
| Batch Data Pipeline | | |
| Stream-based Pipeline | | |
| Lambda Pipeline | | |
| Big data Pipeline | | |

Data pipeline examples

Gathering data is one thing; understanding and analyzing it in a way that benefits the business is quite another. This is where the modern data pipeline is useful. Scaling your data and drawing inferences from it are essential skills for every organization, small or large. The ability to manage and deploy scalable pipelines will enable you to discover insights you never imagined possible and will help you realize the full potential of your data.

Data pipelines are the infrastructure of digital systems. They transport, process, and store data so that businesses can gain useful insights. However, data pipelines must be modernized so that they can handle the increasing complexity and volume of data. Once that modernization is complete, teams will be able to make better decisions more quickly and gain a competitive edge.

List of companies using Data pipeline:

Uber

Uber started working on its data pipeline, Michelangelo, in 2015. It has enabled the company’s internal teams to create, implement, and manage machine learning solutions. It was created to be able to handle the data management, training, assessing, and deployment phases of the machine learning workflow. Using this information, it can also forecast future events and track them.

Prior to the development of Michelangelo, Uber had difficulty developing and deploying machine learning models at the scope and scale of its business. This constrained Uber’s use of machine learning to what a small number of talented data scientists and engineers could create in a limited amount of time. The platform supports not just Uber Eats models but also hundreds of similar predictive use cases across the whole organization.

Taking Uber Eats as an example, Michelangelo supports models for food delivery time estimates, restaurant rankings, search rankings, and search autocomplete. Before an order is placed and again at each step of the delivery process, the delivery model tells the customer how long it will take to prepare and deliver a meal. The data scientists at Uber Eats use gradient-boosted decision tree regression models on Michelangelo to forecast this end-to-end delivery time. The forecast uses information such as the time of day, the average meal preparation time over the previous week, and the average meal preparation time over the previous hour.

GoFundMe

GoFundMe is the largest social fundraising firm in the world, having amassed over 25 million donors and over $3 billion in donations. Despite this, it lacked a central warehouse for the information from its backend relational databases, online events and analytics, support service, and other sources, which totaled about one billion events each month. Without this centralization, these metrics were siloed, and the IT staff could not get a thorough understanding of the direction the business was taking.

To achieve this view, GoFundMe knew it required a flexible and adaptive data flow. In the end, its pipeline provided all of the connectors needed for its data sources, as well as the flexibility to write custom Python scripts to edit data as needed, giving the team total control. The pipeline provided integrity as well as flexibility, because it had safeguards in place to prevent custom ETL scripts from corrupting data. One such safeguard is the ability to re-stream data, which prevents it from being lost, damaged, or duplicated inside the pipeline.
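Re-streaming is only safe when loading the same record twice does not create a duplicate. The sketch below shows one general way to get that property, by keying each row on a unique event ID. It is not GoFundMe's actual implementation; the table, column names, and sample donations are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a central warehouse
conn.execute("CREATE TABLE events (event_id TEXT PRIMARY KEY, amount REAL)")

def load_events(conn, events):
    """Insert or overwrite by event_id, so replaying events never duplicates rows."""
    conn.executemany("INSERT OR REPLACE INTO events VALUES (?, ?)", events)
    conn.commit()

first_run = [("d1", 25.0), ("d2", 50.0)]
load_events(conn, first_run)

# Re-stream: the same events arrive again, together with one new event.
load_events(conn, first_run + [("d3", 10.0)])

row_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
total = conn.execute("SELECT SUM(amount) FROM events").fetchone()[0]
print(row_count, total)
```

With this design, recovering from a failure is as simple as replaying the affected time window, since rows that already arrived are overwritten rather than added again.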

AT&T

Millions of people, federal, state, and local governments, large corporations, and other organizations rely on AT&T as their primary provider of communications and television services. AT&T in turn relies heavily on a smooth data flow to offer these services. It also hosts the data centers of several clients, offers cloud services, and assists with IVR solutions. A change in the Federal Trade Commission Telemarketing Sales Rule required AT&T to keep call recordings for a longer period of time, which forced it to reconsider its data-moving technologies.

Since AT&T outsources the majority of its contact center services to outside contractors, moving this material safely from 17 call centers to its data centers was a challenge. It needed dependable data movement and fast file transfer to transmit these audio recordings. In the end, AT&T required a pipeline with increased transmission speed and capacity as well as ease of installation, automation, and scheduling.

AT&T’s new pipeline ensured compliance with the Federal Trade Commission mandate and made it simple to transport tens of thousands of large audio files per day. It also includes in-depth reporting, strong security, a wide range of configuration options, and configurable workflows.

Data Pipelines Implementation

Your data pipeline can be built on-premises or implemented using services from cloud providers.

Open-source Data Pipeline

You can employ open-source alternatives if your business considers commercial pipeline solutions to be prohibitively expensive.

Advantages

- The price of data analytics software: Downloading, studying, and using open-source software is completely cost-free, so business analysts and engineers can rapidly and cheaply experiment with a variety of open-source solutions to see what works best.

- Unrestricted customization: Open-source programs can be difficult to use, but you can edit the code.

- Flexibility: Open-source tools are flexible for data processing.

Disadvantages:

- No commercial backing: The open-source community is large and excellent, but occasionally you need a fast solution from a trusted source.

- Depending on its popularity and influence, some open-source technology is obsolete, scarcely maintained, or old. Perhaps those who monitored the codebase and reviewed community contributions became too busy or got a new job.

Use Cases:

- Leverage open-source data pipeline technologies while maintaining the same use cases for batch and real-time

On-premises data pipeline

To have an on-premises data pipeline, you must purchase and set up software and hardware for your own data center. You also need to take care of the data center’s upkeep yourself, handle data backup and recovery, monitor the health of your data pipeline, and expand storage and processing power as needed. Even though it takes a lot of time and resources, this approach gives you complete control over your data.

Advantages

- Only form new clusters and allocate resources when absolutely necessary.

- The option to restrict tasks to specific times of day.

- Security for both stored and transmitted information. The access control mechanism in AWS makes it possible to precisely regulate who has access to which resources.

Disadvantages

- Time-consuming preparation

- Compatibility challenges

- Maintenance expenses

Use cases:

- Sharing information about sales and marketing with customer relationship management systems to improve customer care.

- Transferring information about consumers’ online and mobile-device usage to recommendation-making platforms

Final Takeaways

You now have a firm understanding of data pipelines and the components that make up modern pipelines. In today’s marketplace, all firms have to deal with massive data volumes. Creating a data pipeline from scratch requires significant resources and time, which makes it both expensive and slow. If you want to make use of your data and create an efficient data pipeline, you can use cloud-based Zuci solutions. They will reduce your operating costs, bring efficiency to your data operations, and save time.

We hope you liked this article and learned how data pipelines are an intrinsic part of data science! Book a discovery call for our data engineering services today and get ahead of the competition. Make it simple & make it fast.
